Optimizing EF4 and EDMGen2

I have just been reading (via Adrian Florea's links) how EF4 can be optimized: http://www.codeproject.com/KB/database/PerfEntityFramework.aspx

The first suggestion is “Pre-generated Your View”. For this you need the .ssdl, .csdl, and .msl files, so you set the “Metadata Artifact Processing” property to “Copy to Output Directory”. Then you run edmgen over the .ssdl, .csdl, and .msl files to generate the views.

So far, so good.

But the next piece of advice is to keep the “Metadata Artifact Processing” property set to “Embed in Output Assembly”.

One solution is to set the property to “Copy to Output Directory”, compile, set it back to “Embed in Output Assembly”, and compile again. But if you change the edmx (add fields or tables), you must repeat the whole operation, so you have more things to do (if you remember to do them).

A better solution is to generate the views in the pre-build step. But how do you generate the .ssdl, .csdl, and .msl files?

EdmGen2 (http://code.msdn.microsoft.com/EdmGen2) to the rescue. Download it, put it into a lib folder under your solution folder, and add a pre-build event like this:

"$(SolutionDir)lib\edmgen2\edmgen2" /FromEdmx "$(ProjectDir)prod.edmx"

"%windir%\Microsoft.NET\Framework\v4.0.30319\EdmGen.exe" /mode:ViewGeneration /language:CSharp /nologo "/inssdl:prod.ssdl" "/incsdl:prod.csdl" "/inmsl:prod.msl" "/outviews:$(ProjectDir)prod.Views.cs"

What must you change for your project?

1. If you are on 64-bit, change Framework to Framework64.

2. Replace prod with the name of your edmx.

What is the performance?

I tested against a table with 301 rows, using these steps:

1. Open the connection, load the whole table into objects (POCO), close the connection.

2. Open the connection, find the object with PK = 1, close the connection.

3. Open the connection, load 1 table with 2 related tables (Include), close the connection.
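The three steps above can be timed with a small harness along the following lines. This is only a sketch, not the code that produced the numbers below: the `ViewBenchmark` class, the `TimeMs` helper and the three placeholder lambdas are hypothetical, and each lambda would have to be filled in with the real open / query / close sequence against your own context.

```csharp
using System;
using System.Diagnostics;

static class ViewBenchmark
{
    // Runs one step and returns its wall-clock duration in milliseconds.
    public static long TimeMs(Action step)
    {
        Stopwatch watch = Stopwatch.StartNew();
        step();
        watch.Stop();
        return watch.ElapsedMilliseconds;
    }

    static void Main()
    {
        // Placeholders (assumption): replace each body with the real work,
        // i.e. open the connection, run the query, close the connection.
        long loadTable = TimeMs(() => { /* load the whole table into POCOs */ });
        long loadId = TimeMs(() => { /* find the object with PK = 1 */ });
        long loadMultiple = TimeMs(() => { /* load 1 table with 2 Includes */ });

        long total = loadTable + loadId + loadMultiple;

        // One row in the same layout as the result tables.
        Console.WriteLine("| {0} | {1} | {2} | {3} |", loadTable, loadId, loadMultiple, total);
    }
}
```

As far as I know, EF generates the views once per application domain and then caches them, so each measured run should start in a fresh process. Otherwise only the first run pays the view generation cost.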

The results are in milliseconds:

Without pre-compiled views:

| LoadTable | LoadID | LoadMultiple | Total Time |
| --- | --- | --- | --- |
| 579 | 800 | 172 | 1551 |
| 563 | 755 | 171 | 1489 |
| 559 | 754 | 169 | 1482 |
| 568 | 762 | 240 | 1570 |

With pre-compiled views:

| LoadTable | LoadID | LoadMultiple | Total Time |
| --- | --- | --- | --- |
| 606 | 807 | 183 | 1596 |
| 509 | 706 | 177 | 1392 |
| 852 | 137 | 192 | 1181 |
| 530 | 733 | 221 | 1484 |
| 523 | 722 | 183 | 1428 |

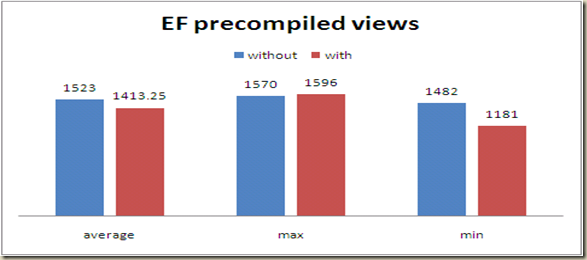

The average / min / max results:

| | average | max | min |
| --- | --- | --- | --- |
| without | 1523 | 1570 | 1482 |
| with | 1413.25 | 1596 | 1181 |
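The summary figures can be re-derived from the Total Time columns of the two result tables. The following is a small sketch of that arithmetic; the `ViewStats` class and the `PercentSaved` helper are illustrative names, not part of the original test code.

```csharp
using System;
using System.Linq;

static class ViewStats
{
    // Time saved by the pre-compiled views, as a percentage of the baseline.
    // A negative result means the run with views was slower.
    public static double PercentSaved(double withoutViews, double withViews)
    {
        return (withoutViews - withViews) / withoutViews * 100.0;
    }

    static void Main()
    {
        // Total Time values (milliseconds) copied from the two result tables.
        int[] without = { 1551, 1489, 1482, 1570 };
        int[] withViews = { 1596, 1392, 1181, 1484, 1428 };

        double avgWithout = without.Average();             // 1523
        double avgWithFour = withViews.Take(4).Average();  // 1413.25, the average in the summary table
        double avgWithAll = withViews.Average();           // 1416.2, all five runs

        Console.WriteLine("average saved (4 runs): {0:F1}%", PercentSaved(avgWithout, avgWithFour));
        Console.WriteLine("average saved (5 runs): {0:F1}%", PercentSaved(avgWithout, avgWithAll));
        Console.WriteLine("min saved: {0:F1}%", PercentSaved(without.Min(), withViews.Min()));
        Console.WriteLine("max saved: {0:F1}%", PercentSaved(without.Max(), withViews.Max()));
    }
}
```

The savings come out at roughly 7.2% for the average (7.0% when the fifth run is included), 20.3% for the min and -1.7% for the max, where the negative sign means the run with views was slower.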

In the picture, a smaller duration (in milliseconds) is better.

Conclusions:

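1. For the average and the min, the difference is about 7% and 20% respectively. Please remember that we are using only 3 queries. Note that the “with” average of 1413.25 covers the first four runs only; with all five runs it is 1416.2, which is still about 7%.

For the max, the result is very curious: the time with pre-compiled views is higher than without. The penalty is under 2% (1596 versus 1570), so I think it is a measuring error, or maybe not. In any case, the penalty is small compared with the gains on the average and the min.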

2. Very curious: finding an object by ID, in a table with 301 rows, took longer than loading the whole table. However, I did not take into account the time needed to find the object in the loaded list (which is also in memory).

3. It may be worth adding the pre-build step shown earlier to pre-compile the views.

Links:

http://www.codeproject.com/KB/database/PerfEntityFramework.aspx